"I thought this would be easy!" But it turned out to be incredibly difficult 🥺

I'm sure you've thought about collecting a lot of information from the web at least once, right? I recently had to scrape a directory site with 36,000 pages, and at first I thought "I'll just get this done quickly!" 💭

But when I actually tried it... it was way more chaotic than I imagined, and I kept failing over and over 😳

First Attempt: Trying n8n Because It Looked Easy to Use 🎨

First, I thought "This looks easy!" and tried using a tool called n8n.

The flow was: call pages with HTTP requests, process the data a bit with JavaScript, and send it to Google Sheets.

It worked fine for about 20 pages, but...

I realized along the way that the email addresses were encrypted, and most of the data was practically unusable 😭

I was like "Seriously?!" and my motivation plummeted 💦

Next Challenge: Trying the Paid Service "Scraper API" 💳

Next, I tried "Scraper API," which looked like a convenient paid service!

It cost $49 per month for 100,000 credits, and I thought this would definitely work, but I was too optimistic 😂

The site was behind Cloudflare's protection, so each request took 40-50 seconds, the automation kept stopping on its own, and I had to restart it manually again and again, which was way too much work.
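A run that stalls like this is often handled by retrying failed requests with a growing delay instead of restarting by hand. The sketch below is only an illustration of that idea, not something the post or Scraper API provides: `fetch_with_retry`, its parameters, and the injected `fetch` function are all hypothetical names.

```python
import time
from typing import Callable


def fetch_with_retry(
    fetch: Callable[[str], str],
    url: str,
    max_attempts: int = 4,
    base_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call fetch(url), retrying with exponential backoff on any exception.

    `fetch` is whatever function actually downloads the page (assumed here).
    `sleep` is injectable so the waiting can be skipped in tests.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except Exception:
            if attempt == max_attempts:
                raise  # give up after the last attempt
            # Wait 2s, 4s, 8s, ... (with the default base_delay) before retrying.
            sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("max_attempts must be at least 1")
```

With the defaults, a page that fails twice and then succeeds costs two waits (2 and 4 seconds) rather than a manual restart.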

Plus, the credits disappeared in an instant, and buying more kept getting more expensive... 💸

I was like "This is just for one job! What is this?!" 🥲
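To put the numbers in perspective, here is a rough back-of-the-envelope calculation. It assumes requests run strictly one after another, and it assumes the 48-hour run mentioned at the end of this post covered all 36,000 pages, which the post does not state outright.

```python
PAGES = 36_000


def hours_for(pages: int, seconds_per_page: float) -> float:
    """Total hours needed if pages are fetched one at a time."""
    return pages * seconds_per_page / 3600


# Scraper API behind Cloudflare: 40-50 seconds per request (from the post).
low = hours_for(PAGES, 40)
high = hours_for(PAGES, 50)
print(f"Sequential run at 40-50 s/request: {low:.0f}-{high:.0f} hours")

# Self-written script: 48 hours in total (assumed to cover every page).
seconds_per_page_own = 48 * 3600 / PAGES
print(f"Own script: about {seconds_per_page_own:.1f} s/page")
```

Under those assumptions the paid route would have needed roughly 400 to 500 hours of sequential requests, against about 4.8 seconds per page for the 48-hour run.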

What I Finally Ended Up With: My Own Script 👩‍💻✨

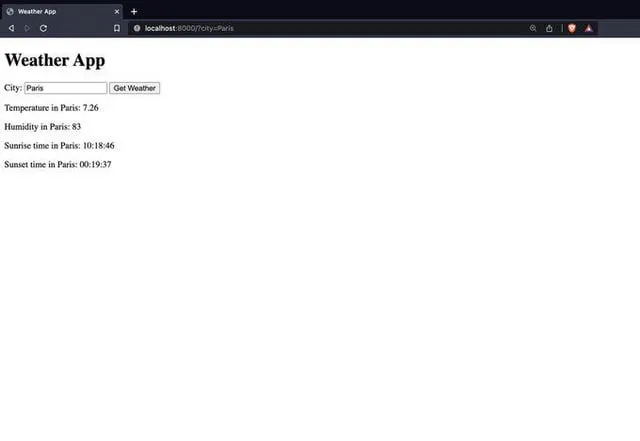

Feeling discouraged, I thought "Maybe I should just make my own?" and for the first time, I wrote scraping code using Puppeteer.

I opened VS Code and wrote a script that:

- Followed pagination to get all the links

- Extracted emails, phone numbers, addresses, and website URLs from each page

- Saved data locally while automatically looping through everything
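The three steps above can be sketched roughly as follows. This is not the Puppeteer script from the post (which isn't shown); it is a simplified, standard-library Python illustration of the same loop. The `fetch` function, the `class="listing"` and `rel="next"` markup, and the regular expressions are all assumptions made for the example, and only emails and phone numbers are extracted to keep it short.

```python
import json
import re
from pathlib import Path
from typing import Callable, Iterator, Optional

# Deliberately loose patterns; a real site needs its own selectors.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")
# Hypothetical markup: listing links and a "next page" link.
LINK_RE = re.compile(r'<a[^>]*class="listing"[^>]*href="([^"]+)"')
NEXT_RE = re.compile(r'<a[^>]*rel="next"[^>]*href="([^"]+)"')


def listing_links(fetch: Callable[[str], str], start_url: str) -> Iterator[str]:
    """Follow pagination from start_url and yield every listing link."""
    url: Optional[str] = start_url
    while url:
        html = fetch(url)
        yield from LINK_RE.findall(html)
        match = NEXT_RE.search(html)
        url = match.group(1) if match else None


def extract(html: str) -> dict:
    """Pull the first email and phone number out of a detail page, if any."""
    email = EMAIL_RE.search(html)
    phone = PHONE_RE.search(html)
    return {
        "email": email.group(0) if email else None,
        "phone": phone.group(0) if phone else None,
    }


def crawl(fetch: Callable[[str], str], start_url: str, out_path: Path) -> int:
    """Append one JSON line per page to out_path; skip pages already saved."""
    done = set()
    if out_path.exists():
        with out_path.open(encoding="utf-8") as f:
            done = {json.loads(line)["url"] for line in f if line.strip()}
    written = 0
    with out_path.open("a", encoding="utf-8") as out:
        for link in listing_links(fetch, start_url):
            if link in done:
                continue  # already scraped in an earlier run
            record = {"url": link, **extract(fetch(link))}
            out.write(json.dumps(record) + "\n")
            out.flush()  # keep progress on disk if the run is interrupted
            done.add(link)
            written += 1
    return written
```

Writing one line per page and skipping URLs already in the file means an interrupted run can simply be started again and will pick up where it left off.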

I honestly thought "Why didn't I do this from the beginning?" 😳

Key Points to Remember for Web Scraping 📝

- Paid tools can be convenient for short tasks, but when scraping massive numbers of pages at once, you'll hit limits and errors that cause a lot of stress

- Even if it takes time initially, writing your own code often gives you more freedom and stability

- I thought "I'll solve this with money," but I learned that doing it yourself can actually be faster in the end 💡

By the way, it might be a miracle that my laptop didn't break after running for 48 hours straight 🤣

Maybe it's worth gathering a little courage and trying to do it yourself too 🌸

Comments

ロバート

I'm not an expert on scraping, but wasn't using residential IPs the key to success? I hear they're harder to block.

サラ

Scraping has always been a hassle, both now and in the past. When I collected ads from 100 sites, I struggled with iframes and canvas tags. Does anyone know how to take a screenshot of a canvas element?

クリス

Next time, try Ulixee Hero. It's stealthier, and it's free and open source.

ベン

Where did you get 36,000 LinkedIn profile PDFs from?

ジャック

What about the ethics of scraping personal information? I definitely don't want my email taken.

ハンナ

I gave this to a friend who used it on Reddit and really regretted it.

レオ

Back around 2000, I used demo tools in my favorite candidate's town to collect emails and sent 500 promotional emails each. Got quite a few replies, it was interesting.

エイダン

This level of workload is far too light to make a laptop blow up (lol)

ハンナ

You're just showing off your intelligence, aren't you?

キンバリー

For Windows, I recommend AutoHotkey. Someone on my team used it to scrape 1.5 million documents. But scraping public sites requires various precautions.

クリス

You definitely need residential IPs; a VPS won't work. There's also curl-impersonate, a tool that mimics Chrome or Firefox. There's a Node wrapper too (though I made it myself).

グレース

You could also use wget to download recursively with 10-second waits.

ベン

Tell me more about the script you made.

レオ

I do something similar using 3 local M4 machines to scrape about 50,000 TikTok profiles and 1.5 million posts daily while running other services. Today's PCs are incredibly powerful, and cloud services have gotten too expensive.