Have you heard of "rate limiters"? You've probably seen messages like "Please wait, too many requests" while using apps or services✨

Behind that message is a mechanism that limits the number of requests that can be processed within a certain time period, so that servers don't get overloaded and crash🧠

This time, I'll roughly explain how to build rate limiters from a system design perspective🌸

What is a Rate Limiter?🤔

Simply put, it's a rule that says "you can only use this ○ times in ○ seconds".

For example:

- The API can be called up to 100 times per minute

- Login can be attempted up to 1000 times per hour

By applying these limits, we regulate server load💡

Without this, services could crash due to malicious attacks or simply too much traffic😳

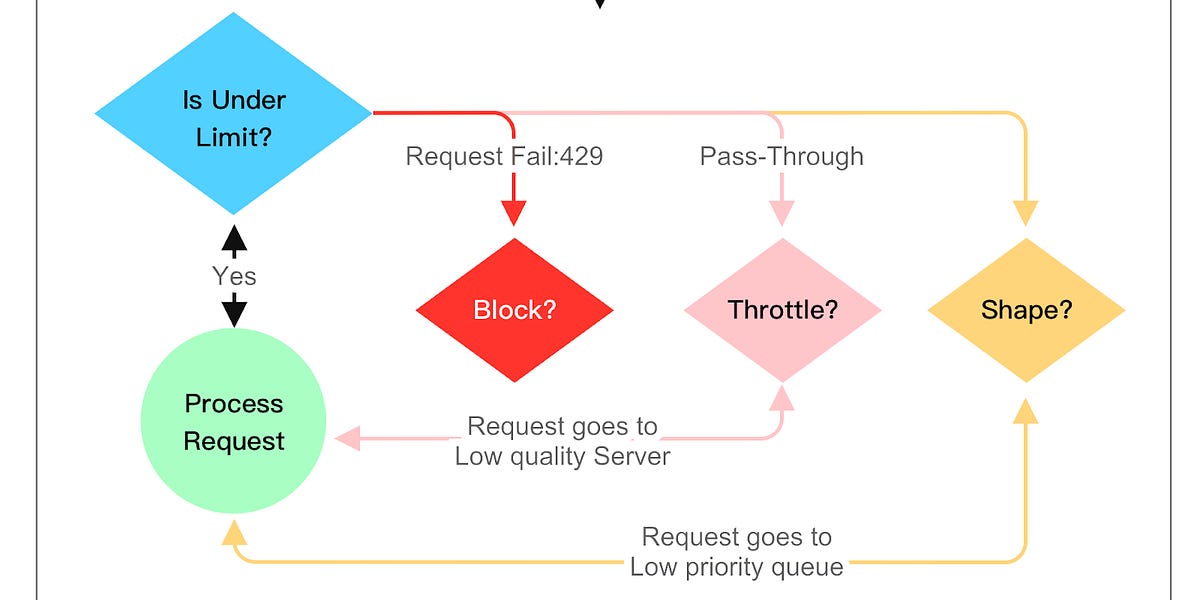

How to Design One? 3 Key Points✨

- Determine the limit unit

  For example, is it "5 times in 10 seconds" or "100 times in 1 hour"? This becomes the basic rule📌

- Where to count requests

  - On the application side?

  - On the server side?

  - Or at the API gateway?

  Most often, counting happens near the server entrance (like API gateways)🧠

- Counter update method

  A mechanism that increments the count with each request and rejects when it exceeds the set limit.

  But it's not just about counting - how long we count for is important⚡

3 Commonly Used Algorithms🎀

1. Fixed Window

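Counts requests within fixed time windows. For example, with a one-minute window the counter resets every minute, so each count covers only the requests between second 0 and second 59 of that minute📅

Simple, but it gets lenient around window boundaries: a burst at the very end of one window plus another burst right after the reset can let through up to twice the limit in a short span😵‍💫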
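To make the idea concrete, here is a minimal in-memory sketch of a fixed window counter. The class name `FixedWindowLimiter`, the `client_id` key, and the injectable `clock` are all assumptions for illustration, not something from a particular library.

```python
import time
from collections import defaultdict


class FixedWindowLimiter:
    """Allow at most `limit` requests per client in each fixed window (illustrative sketch)."""

    def __init__(self, limit, window_seconds, clock=time.time):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the behaviour can be checked without waiting
        # (client_id, window index) -> request count.
        # Old windows are never cleaned up here; a real store would expire them.
        self.counts = defaultdict(int)

    def allow(self, client_id):
        # Every timestamp in the same window maps to the same integer index.
        window = int(self.clock() // self.window_seconds)
        key = (client_id, window)
        if self.counts[key] >= self.limit:
            return False  # limit reached for this window
        self.counts[key] += 1
        return True
```

With `limit=5` and `window_seconds=60`, five requests at second 59 and five more at second 60 are all accepted, because they fall into two different windows. That is the boundary leniency in action.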

2. Sliding Window

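For every request, it checks how many requests arrived in the past ○ seconds, counting back from the current moment.

This reduces the window boundary problem, but it needs more bookkeeping (for example, remembering request timestamps), so calculations are a bit more complex✨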
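One common way to do this is a "sliding window log" that keeps the timestamps of recent requests. The sketch below assumes that variant; `SlidingWindowLimiter`, `client_id`, and `clock` are hypothetical names chosen for the example.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per client in any trailing window (illustrative sketch)."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the behaviour can be checked without waiting
        self.history = defaultdict(deque)  # client_id -> timestamps of accepted requests

    def allow(self, client_id):
        now = self.clock()
        timestamps = self.history[client_id]
        # Forget requests that are no longer inside the trailing window.
        while timestamps and timestamps[0] <= now - self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.limit:
            return False  # too many requests in the past window_seconds
        timestamps.append(now)
        return True
```

Unlike the fixed window, a burst at second 59 still counts against a request at second 60. The price is memory: this version stores one timestamp per accepted request.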

3. Token Bucket

Imagine a bucket that stores tokens (permits) in advance, and each request consumes a token when it comes in.

Requests are rejected if there are no tokens left. Tokens are replenished over time at a fixed rate, up to the bucket's capacity🎈

This can handle occasional bursts (sudden high traffic) too♪
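Here is a small sketch of a token bucket for a single client. The names `TokenBucket`, `capacity`, `refill_per_second`, and `cost` are assumptions for illustration, and tokens are refilled lazily whenever a request arrives instead of by a background timer.

```python
import time


class TokenBucket:
    """Token bucket for one client (illustrative sketch)."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable so the behaviour can be checked without waiting
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.last_refill = clock()

    def allow(self, cost=1):
        now = self.clock()
        # Add the tokens earned since the last call, capped at the bucket capacity.
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens < cost:
            return False  # not enough tokens: reject
        self.tokens -= cost
        return True
```

The `capacity` decides how big a burst can be, and `refill_per_second` decides the long-term average rate, so the two can be tuned separately.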

How to Actually Implement?🔧

- Manage counts in memory or databases

  Fast counting stores like Redis are often used📚

- Data synchronization is challenging in distributed systems

  With multiple servers, coordination is needed on where to count💭

- Including limit information in responses is considerate

  Telling users "You have X requests left" makes them feel more secure🥺
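As a rough sketch of that last point, the helper below builds the status code and headers for a response. The function name `build_rate_limit_headers` and its parameters are hypothetical. The `X-RateLimit-*` header names are a widely used convention, not a formal standard, so treat them as an assumption. `429 Too Many Requests` and `Retry-After` are standard HTTP.

```python
def build_rate_limit_headers(limit, remaining, reset_seconds, allowed):
    """Return (status_code, headers) describing the caller's rate limit state.

    Hypothetical helper: header names follow a common convention, not a standard.
    """
    headers = {
        "X-RateLimit-Limit": str(limit),                  # the rule itself
        "X-RateLimit-Remaining": str(max(remaining, 0)),  # "You have X requests left"
        "X-RateLimit-Reset": str(reset_seconds),          # seconds until the count resets
    }
    if allowed:
        return 200, headers
    # Rejected: tell the client how long to wait before retrying.
    headers["Retry-After"] = str(reset_seconds)
    return 429, headers
```

For example, `build_rate_limit_headers(100, 0, 30, allowed=False)` gives status `429` with `Retry-After` set to `"30"`, so a well-behaved client knows when to come back.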

How to Answer in Interviews?🧠

I was also like "What's that?" at first, but an explanation like "Rate limiters are mechanisms to prevent excessive access by applying time-based limits" is a good start.

Mentioning that implementations include algorithms like Fixed Window or Token Bucket should be fine👌

Also, adding a word about the challenges of count synchronization in distributed environments and solutions for them would be great✨

Rate limiters might seem modest, but they're essential mechanisms for service stability💭

When you learn about them, they're simpler than expected and you'll think "Oh, I get it now," so if it comes up in interviews, just calmly explain🫶
